One of the appeals of cloud computing is the idea of using what you need, when you need it. One of the ways Amazon provides for this is autoscaling, which lets you vary the number of (related) running instances according to some metric that is being tracked.

In this article, we look at how you can trigger a change in the number of running instances using a custom Cloudwatch metric – including the setup of said metric, and a brief look at the interactions between the various autoscaling commands used.

Setting up a custom Cloudwatch metric

Autoscaling uses Cloudwatch alarms to trigger events; to use it, we therefore need a functioning Cloudwatch metric and alarm.

Cloudwatch’s basic monitoring reports in 5-minute intervals and measures a number of parameters that are independent of the operating system and user data, including CPU utilization, disk I/O (in operations and bytes) and network usage. Additional metrics are available for other services (e.g. EBS volumes and SNS).

Cloudwatch metrics do not have to be created in advance, nor do you need to allocate space for them: a metric is created automatically when data is first added to it. It is worth mentioning that you cannot delete a metric; any data saved is retained for 2 weeks.

Custom metrics are created using the ‘PutMetricData’ request, which is available in the AWS command line tools as mon-put-data:

```
mon-put-data --metric-name value --namespace value
    [--dimensions "key1=value1,key2=value2..."] [--timestamp value] [--unit value]
    [--value value] [--statisticValues "SampleCount=value,Sum=value,Maximum=value,Minimum=value"]
    [General Options]
```

Note: metrics that differ in name, namespace, or dimensions (all compared case-sensitively) are treated as different metrics.
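A toy Python model makes this rule concrete. It is not AWS code: `metric_key` is a hypothetical helper that treats a metric as the combination of namespace, name, and set of dimensions, compared exactly. It also shows why data published with an InstanceID dimension is not the same metric as data published with an AutoScalingGroupName dimension, even under the same name.

```python
def metric_key(namespace, name, dimensions):
    # In this model a metric is identified by its namespace, its name and
    # its set of dimensions; string comparison is exact (case-sensitive).
    return (namespace, name, frozenset(dimensions.items()))


# "i-12345678" is a made-up instance ID used for illustration
per_instance = metric_key("System/Linux", "UsedMemoryPercent",
                          {"InstanceID": "i-12345678"})
per_group = metric_key("System/Linux", "UsedMemoryPercent",
                       {"AutoScalingGroupName": "scaly-geek"})
other_case = metric_key("System/Linux", "usedmemorypercent",
                        {"InstanceID": "i-12345678"})

print(per_instance == per_group)   # False: same name, different dimensions
print(per_instance == other_case)  # False: names differ only in case
```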

Pass the command a metric name, a namespace, your credentials, and a value, and you are good to go. It might take a few minutes for the results to first show up in Cloudwatch, although in my experience it is usually only a few seconds.

It is worth noting that the AWS command line tools are Java-based and have to start Java before they can do anything, which makes them quite slow. Nonetheless, they are easy to use and a good starting point (we’ll look at an alternative approach later).

I’ll use the example of used memory throughout this post, since it is a fairly common use case (and it can be easily adapted for other metrics).

Amazon has posted a bash script to get us started; a slightly modified version follows:

```bash
#!/bin/bash
export AWS_CLOUDWATCH_HOME=/opt/aws/apitools/mon
export EC2_PRIVATE_KEY=/path/to/pk-XXXXXXXXXXXXXXXXXXXXXXXXXX.pem
export EC2_CERT=/path/to/cert-XXXXXXXXXXXXXXXXXXXXXXXXXX.pem
export AWS_CLOUDWATCH_URL=https://monitoring.amazonaws.com
export PATH=$AWS_CLOUDWATCH_HOME/bin:$PATH
export JAVA_HOME=/usr/lib/jvm/jre

# get ec2 instance id
instanceid=`/usr/bin/curl -s http://169.254.169.254/latest/meta-data/instance-id`

# total memory (Mem row) and free memory net of buffers/cache, both in MB
memtotal=`free -m | grep 'Mem' | tr -s ' ' | cut -d ' ' -f 2`
memfree=`free -m | grep 'buffers/cache' | tr -s ' ' | cut -d ' ' -f 4`
let "memused=100-memfree*100/memtotal"

mon-put-data --metric-name "UsedMemoryPercent" --namespace "System/Linux" --dimensions "InstanceID=$instanceid" --value "$memused" --unit "Percent"
```

The script takes the value in the ‘free’ column of the ‘-/+ buffers/cache’ row, expresses it as a percentage of ‘total’ (from the ‘Mem’ row), and subtracts that from 100 to get the percentage of memory in use. It reports the result as one metric (UsedMemoryPercent) in the namespace ‘System/Linux’, with a single dimension (InstanceID).

Notes:

- AWS_CLOUDWATCH_HOME/bin contains the Cloudwatch command line tools.

- The paths used above are for the Amazon Linux AMI.

- As a personal preference, I have used curl instead of wget.

- Bash arithmetic is integer-only, so the calculation above yields only whole percentages.
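To see what the integer-only arithmetic does, here is the script's formula mirrored in Python next to its floating-point equivalent. The figures are hypothetical `free -m` values; for non-negative operands Python's `//` truncates the same way bash's `/` does.

```python
def bash_used_percent(memfree, memtotal):
    # Mirrors: let "memused=100-memfree*100/memtotal"
    # bash evaluates memfree*100 first, then divides, discarding the remainder
    return 100 - (memfree * 100) // memtotal


def exact_used_percent(memfree, memtotal):
    # The same formula in floating point, for comparison
    return 100 - memfree * 100 / memtotal


# hypothetical figures in MB
memtotal, memfree = 613, 400
print(bash_used_percent(memfree, memtotal))             # 35
print(round(exact_used_percent(memfree, memtotal), 2))  # 34.75
```

Because the truncation is applied to the free share, the reported used percentage can be up to one percentage point higher than the exact value, never lower.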

To use the script, make it executable:

chmod +x /path/to/script.sh

Then schedule it to run every 5 minutes by adding the following line with crontab -e:

*/5 * * * * /path/to/script.sh
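The `*/5` in the minute field is cron's step syntax: the job runs at every minute from 0 to 59 that is a multiple of 5. The hypothetical `expand_minutes` helper below handles only `*` and `*/N` (not lists or ranges) and simply shows which minutes match.

```python
def expand_minutes(field):
    """Expand a cron minute field written as '*' or '*/N'.
    Other cron syntax (lists, ranges) is deliberately not handled."""
    if field == "*":
        return list(range(60))
    if field.startswith("*/"):
        step = int(field[2:])
        if step <= 0:
            raise ValueError("step must be positive")
        return list(range(0, 60, step))
    raise ValueError("unsupported minute field: %r" % field)


minutes = expand_minutes("*/5")
print(len(minutes))  # 12 runs per hour
print(minutes[:3])   # [0, 5, 10]
```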

The project ‘aws-missing-tools’, hosted on Google Code, has a few more scripts similar to the one above for gathering other metrics.

Because the Java-based AWS CLI tools are slow to start, it is far more efficient to call the API directly. This can be done with any of the available SDKs (e.g. PHP, Ruby, etc.), but even an SDK seems like overkill for a single call. I came across a simple Python script from Loggly that signs the request parameters and can easily be set up to put Cloudwatch metrics. I have modified it to be a single script that accepts the metric value and the instance ID as command-line arguments:

cloudfront-mem.py:

```python
# Python 2 (uses urllib.urlencode and the httplib2 package)
# usage: python cloudfront-mem.py <value> <instance-id>
import base64, hashlib, hmac, os, sys, time
from urllib import urlencode, quote_plus

import httplib2

aws_key = 'AWS_ACCESS_KEY_ID'
aws_secret_key = 'AWS_SECRET_ACCESS_KEY_ID'

value = sys.argv[1]
instanceid = sys.argv[2]

params = {'Namespace': 'System/Linux',
          'MetricData.member.1.MetricName': 'UsedMemoryPercent',
          'MetricData.member.1.Value': value,
          'MetricData.member.1.Unit': 'Percent',
          'MetricData.member.1.Dimensions.member.1.Name': 'InstanceID',
          'MetricData.member.1.Dimensions.member.1.Value': instanceid}


def getSignedURL(key, secret_key, action, parms):
    # base url
    base_url = "monitoring.amazonaws.com"

    # build the parameter dictionary (a copy, so the caller's dict is untouched)
    url_params = dict(parms)
    url_params['AWSAccessKeyId'] = key
    url_params['Action'] = action
    url_params['SignatureMethod'] = 'HmacSHA256'
    url_params['SignatureVersion'] = '2'
    url_params['Version'] = '2010-08-01'
    url_params['Timestamp'] = time.strftime("%Y-%m-%dT%H:%M:%S.000Z", time.gmtime())

    # sort and encode the parameters
    keys = sorted(url_params.keys())
    values = map(url_params.get, keys)
    url_string = urlencode(zip(keys, values))

    # sign, encode and quote the entire request string
    string_to_sign = "GET\n%s\n/\n%s" % (base_url, url_string)
    signature = hmac.new(key=secret_key, msg=string_to_sign, digestmod=hashlib.sha256).digest()
    signature = base64.b64encode(signature)
    urlencoded_signature = quote_plus(signature)
    url_string += "&Signature=%s" % urlencoded_signature

    # the signed request URL
    return "http://%s/?%s" % (base_url, url_string)


class Cloudwatch:
    def __init__(self, key, secret_key):
        # environment variables, when set, take precedence over the values above
        self.key = os.getenv('AWS_ACCESS_KEY_ID', key)
        self.secret_key = os.getenv('AWS_SECRET_ACCESS_KEY_ID', secret_key)

    def putData(self, params):
        signedURL = getSignedURL(self.key, self.secret_key, 'PutMetricData', params)
        h = httplib2.Http()
        resp, content = h.request(signedURL)
        # print resp
        # print content


cw = Cloudwatch(aws_key, aws_secret_key)
cw.putData(params)
```

The script above has one dependency, httplib2, which wasn’t included in my default Python installation; it can be installed from the EPEL repository with:

yum --enablerepo=epel install python-httplib2
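If you would rather avoid the httplib2 dependency, or are on Python 3 (the script above is Python 2), the signing can be done with the standard library alone. The sketch below is my reading of the Signature Version 2 steps the script performs: sort the parameters, build the string `GET\nhost\n/\nquery`, sign it with HMAC-SHA256 and base64-encode the result. Unlike the original, it percent-encodes with RFC 3986 rules (`quote(..., safe="-_.~")`) instead of `urlencode`, and it only builds the URL; sending it (for example with `urllib.request.urlopen`) is left out. `signed_url`, the placeholder keys, the instance ID and the fixed timestamp are illustrative assumptions.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import quote

HOST = "monitoring.amazonaws.com"  # the same endpoint as the script above


def enc(value):
    # RFC 3986 encoding: everything except A-Z a-z 0-9 - _ . ~ is escaped
    return quote(str(value), safe="-_.~")


def signed_url(access_key, secret_key, action, params, timestamp=None):
    """Build a Signature Version 2 signed GET URL (sketch only)."""
    p = dict(params)
    p.update({
        "AWSAccessKeyId": access_key,
        "Action": action,
        "SignatureMethod": "HmacSHA256",
        "SignatureVersion": "2",
        "Version": "2010-08-01",
        "Timestamp": timestamp or time.strftime("%Y-%m-%dT%H:%M:%S.000Z",
                                                time.gmtime()),
    })
    # canonical query string: parameters sorted by name, names and values encoded
    query = "&".join("%s=%s" % (enc(k), enc(p[k])) for k in sorted(p))
    string_to_sign = "GET\n%s\n/\n%s" % (HOST, query)
    digest = hmac.new(secret_key.encode("utf-8"),
                      string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return "https://%s/?%s&Signature=%s" % (HOST, query, enc(signature))


# placeholder credentials and a fixed timestamp so the output is repeatable
params = {
    "Namespace": "System/Linux",
    "MetricData.member.1.MetricName": "UsedMemoryPercent",
    "MetricData.member.1.Value": "42",
    "MetricData.member.1.Unit": "Percent",
    "MetricData.member.1.Dimensions.member.1.Name": "InstanceID",
    "MetricData.member.1.Dimensions.member.1.Value": "i-12345678",
}
url = signed_url("AKIDEXAMPLE", "SECRETEXAMPLE", "PutMetricData", params,
                 timestamp="2012-01-01T00:00:00.000Z")
print(url)
```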

I use the following bash script, run from cron, to gather the data and call the Python script:

```bash
#!/bin/bash
instanceid=`/usr/bin/curl -s http://169.254.169.254/latest/meta-data/instance-id`

# /proc/meminfo values are in kB; the patterns are quoted so the shell
# cannot expand [0-9]* as a filename glob
memtotal=`/bin/grep -i '^MemTotal:' /proc/meminfo | /bin/grep -o '[0-9]*'`
memfree=`/bin/grep -i '^MemFree:' /proc/meminfo | /bin/grep -o '[0-9]*'`
buffers=`/bin/grep -i '^Buffers:' /proc/meminfo | /bin/grep -o '[0-9]*'`
cached=`/bin/grep -i '^Cached:' /proc/meminfo | /bin/grep -o '[0-9]*'`

let "memusedpct=100-(memfree+buffers+cached)*100/memtotal"

/usr/bin/python /path/to/cloudfront-mem.py "$memusedpct" "$instanceid"
```

Absolute paths are used because cron runs jobs with a minimal environment, including a minimal PATH. Of course, neither script does any real error checking, but they serve my purposes quite well.
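If you would rather keep the whole job in Python, the same calculation can be done on the text of /proc/meminfo. This is a sketch, not the script I run: `used_memory_percent` is a hypothetical helper and the sample figures are made up.

```python
def used_memory_percent(meminfo_text):
    """Same formula as the cron script, in integer arithmetic:
    100 - (MemFree + Buffers + Cached) * 100 / MemTotal."""
    kb = {}
    for line in meminfo_text.splitlines():
        name, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            kb[name.strip()] = int(fields[0])  # first field is the value in kB
    # exact key lookups, so "SwapCached" is never mistaken for "Cached"
    free_or_cache = kb["MemFree"] + kb["Buffers"] + kb["Cached"]
    return 100 - free_or_cache * 100 // kb["MemTotal"]


# hypothetical excerpt of /proc/meminfo
sample = """MemTotal:         615664 kB
MemFree:          102400 kB
Buffers:           20480 kB
Cached:           204800 kB
SwapCached:            0 kB
"""
print(used_memory_percent(sample))  # 47
```

On the instance itself, passing the contents of /proc/meminfo (read with `open("/proc/meminfo").read()`) gives the live value.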

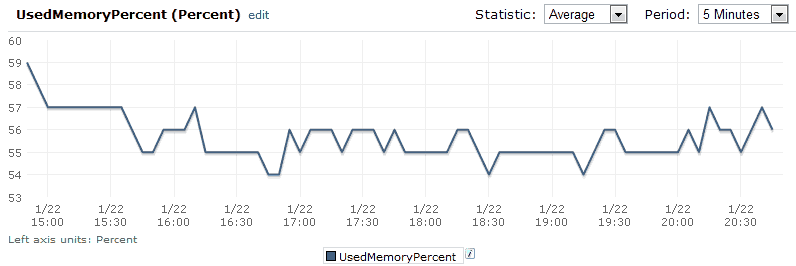

Hopefully, once you are up and running, you can see something like the following, in CloudWatch:

Setting up Autoscaling

Autoscaling is set up the same way whether it is driven by a custom metric or by one of the standard instance metrics.

For ease of use (i.e. so we don’t have to pass them to every command), we should set (export) either:

- AWS_CREDENTIAL_FILE or

- both: EC2_PRIVATE_KEY and EC2_CERT

The slow start-up of the CLI tools is not a concern for autoscaling, since each command only needs to be run once, by hand, rather than repeatedly.

Create the launch config

This step defines the EC2 instance to launch, so it resembles a call to ec2-run-instances. As with that command, you must pass an AMI and an instance type, and you can also specify additional parameters such as a block device mapping, security group, or user data.

```
as-create-launch-config LaunchConfigurationName --image-id value --instance-type value
    [--block-device-mapping "key1=value1,key2=value2..."]
    [--monitoring-enabled/monitoring-disabled] [--kernel value] [--key value]
    [--ramdisk value] [--group value[,value...]] [--user-data value]
    [--user-data-file value] [General Options]
```

For example, to create a launch config called ‘geek-config’ that launches an m1.small instance from the 32-bit Amazon Linux AMI (ami-31814f58), using the key pair ‘geek-key’ and the security group ‘geek-group’, we would use the following:

as-create-launch-config geek-config --image-id ami-31814f58 --instance-type m1.small --key geek-key --group geek-group

Note: you can use the security group name unless you are launching into a VPC, in which case you must use the security group ID.

Create the autoscaling group

Here we define the parameters for scaling, such as the availability zone(s) to launch into and the lower and upper limits on the number of instances, and we associate the group with the launch configuration we created previously. This command also lets us set up load balancers if needed, specify a cooldown period (during which further scaling is suspended after the group size has been adjusted), and start with a number of instances other than the minimum.

```
as-create-auto-scaling-group AutoScalingGroupName --availability-zones value[,value...]
    --launch-configuration value --max-size value --min-size value
    [--default-cooldown value] [--desired-capacity value]
    [--grace-period value] [--health-check-type value]
    [--load-balancers value[,value...]] [--placement-group value]
    [--vpc-zone-identifier value] [General Options]
```

For example, to have a group (‘scaly-geek’) start with 2 instances and scale between 1 and 5 instances based on the launch config above, all in the us-east-1a availability zone, with a 3-minute cooldown, we would use:

as-create-auto-scaling-group scaly-geek --availability-zones us-east-1a --launch-configuration geek-config --min-size 1 --max-size 5 --default-cooldown 180 --desired-capacity 2

Note: if you do not specify --desired-capacity, the group starts with --min-size instances.
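A small Python model of how the starting size is chosen. `initial_capacity` is hypothetical, and the range check reflects my assumption that the desired capacity has to lie between the minimum and maximum sizes.

```python
def initial_capacity(min_size, max_size, desired_capacity=None):
    """Model of a new group's starting size: --desired-capacity if given,
    otherwise --min-size; assumed to be required to fall in [min, max]."""
    if min_size > max_size:
        raise ValueError("min-size must not exceed max-size")
    capacity = min_size if desired_capacity is None else desired_capacity
    if not min_size <= capacity <= max_size:
        raise ValueError("desired-capacity must be between min-size and max-size")
    return capacity


print(initial_capacity(1, 5, 2))  # 2, as in the scaly-geek example
print(initial_capacity(1, 5))     # 1, falls back to min-size
```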

Create a policy to scale with

This command lets us define a new capacity, either as a change (in instances or as a percentage) or as an exact number of instances, and associates the policy with the scaling group we created previously. Negative numbers represent a decrease in the number of instances. The policy is referenced by its Amazon Resource Name (ARN) and used as the action of a Cloudwatch alarm. We can create as many policies as we need, but it is common to have at least two: one to scale up and one to scale down.

```
as-put-scaling-policy PolicyName --type value --auto-scaling-group value
    --adjustment value [--cooldown value] [General Options]
```

Acceptable values for --type are ExactCapacity, ChangeInCapacity, and PercentChangeInCapacity. A --cooldown value specified here overrides the default cooldown set on our scaling group above.
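The three adjustment types can be summarised in a short Python model. `new_capacity` is a hypothetical helper, not part of the AWS tools; it does not model cooldowns, and the rounding rule for PercentChangeInCapacity (drop the fraction, but always move by at least one instance) is my understanding of AWS's documented behaviour and worth checking against the current documentation.

```python
def new_capacity(current, adjustment, adjustment_type, min_size, max_size):
    """Sketch: the group size a policy would request, kept within the
    group's --min-size/--max-size limits."""
    if adjustment_type == "ExactCapacity":
        target = adjustment
    elif adjustment_type == "ChangeInCapacity":
        target = current + adjustment
    elif adjustment_type == "PercentChangeInCapacity":
        change = current * adjustment / 100.0
        # Assumed rounding: truncate toward zero, but a non-zero
        # percentage always moves the group by at least one instance.
        step = int(change)
        if step == 0 and change != 0:
            step = 1 if change > 0 else -1
        target = current + step
    else:
        raise ValueError("unknown adjustment type: %s" % adjustment_type)
    return max(min_size, min(max_size, target))


# LowMemPolicy (+1) and HighMemPolicy (-1) applied to a 2-instance scaly-geek group
print(new_capacity(2, 1, "ChangeInCapacity", 1, 5))   # 3
print(new_capacity(2, -1, "ChangeInCapacity", 1, 5))  # 1
```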

ARNs refer to resources across all the AWS products and take the form:

arn:aws:<vendor>:<region>:<namespace>:<relative-id>

Where:

- vendor identifies the AWS product (e.g., sns)

- region is the AWS Region the resource resides in (e.g., us-east-1), if any

- namespace is the AWS account ID with no hyphens (e.g., 123456789012)

- relative-id is the service specific portion that identifies the specific resource

Not all fields are required by every resource.
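Because the relative-id can itself contain colons, an ARN is best split on its first five colons only. A minimal Python sketch; the `parse_arn` helper and the S3 bucket name are hypothetical, and the policy ARN is the sample from this post.

```python
def parse_arn(arn):
    """Split an ARN into the fields listed above.
    maxsplit=5 keeps any colons inside relative-id intact."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError("not an ARN: %r" % arn)
    _, partition, vendor, region, namespace, relative_id = parts
    return {"partition": partition,    # "aws" in the form shown above
            "vendor": vendor,
            "region": region,          # empty when the resource has none
            "namespace": namespace,    # account ID; may also be empty
            "relative-id": relative_id}


policy = parse_arn("arn:aws:autoscaling:us-east-1:0123456789:"
                   "scalingPolicy/abc-1234-def-567")
print(policy["vendor"], policy["region"], policy["relative-id"])
# autoscaling us-east-1 scalingPolicy/abc-1234-def-567

bucket = parse_arn("arn:aws:s3:::my-bucket")  # hypothetical bucket name
print(repr(bucket["region"]), repr(bucket["namespace"]))  # '' ''
```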

Sample Output:

POLICY-ARN arn:aws:autoscaling:us-east-1:0123456789:scalingPolicy/abc-1234-def-567

It is important to note down the ARN returned by this command as it will be needed in order to associate the policy with a cloudwatch alarm. Each time you run the command you will get a unique ARN.

For example, to create a scale-up policy (LowMemPolicy) for our scaling group that adds one instance, we would use:

as-put-scaling-policy LowMemPolicy --auto-scaling-group scaly-geek --adjustment=1 --type ChangeInCapacity

To do the same, but scale down (by one instance) instead, we would use:

as-put-scaling-policy HighMemPolicy --auto-scaling-group scaly-geek --adjustment=-1 --type ChangeInCapacity

Note: you can test your policy by using the command as-execute-policy:

```
as-execute-policy PolicyName [--auto-scaling-group value]
    [--honor-cooldown/no-honor-cooldown] [General Options]
```

Create Cloudwatch Alarms

This is the final step, which ties everything together. We are collecting data in Cloudwatch, so we can now set up an alarm that is triggered when our metric breaches the target value. The alarm is configured to perform one or more actions, specified by their ARNs. In our case, the alarm triggers a scaling policy, which then changes the number of instances in our scaling group.

As with our scaling policies, there can be multiple alarms. In our case there are two: one for the lower bound (which triggers our scale-down policy) and one for the upper bound (which triggers our scale-up policy).

```
mon-put-metric-alarm AlarmName --comparison-operator value --evaluation-periods value
    --metric-name value --namespace value --period value --statistic value
    --threshold value [--actions-enabled value] [--alarm-actions value[,value...]]
    [--alarm-description value] [--dimensions "key1=value1,key2=value2..."]
    [--insufficient-data-actions value[,value...]] [--ok-actions value[,value...]]
    [--unit value] [General Options]
```

Notes:

- --metric-name and --namespace must match those used to create the original Cloudwatch metric.

- --period and --evaluation-periods are both required. The former defines the length of one period in seconds, and the latter defines the number (an integer) of consecutive periods that must match the criteria to trigger the alarm.

- Valid values for --comparison-operator are: GreaterThanOrEqualToThreshold, GreaterThanThreshold, LessThanThreshold, and LessThanOrEqualToThreshold.

- Valid values for --statistic are: SampleCount, Average, Sum, Minimum, Maximum.

For example, to create an alarm that triggers a scaling policy whenever our UsedMemoryPercent averages over 85% for 2 consecutive 5-minute periods, we would use:

mon-put-metric-alarm LowMemAlarm --comparison-operator GreaterThanThreshold --evaluation-periods 2 --metric-name UsedMemoryPercent --namespace "System/Linux" --period 300 --statistic Average --threshold 85 --alarm-actions arn:aws:autoscaling:us-east-1:0123456789:scalingPolicy/abc-1234-def-567 --dimensions "AutoScalingGroupName=scaly-geek"

For the alarm that triggers our scale-down policy once UsedMemoryPercent averages below 60% for 2 consecutive 5-minute periods, we would use:

mon-put-metric-alarm HighMemAlarm --comparison-operator LessThanThreshold --evaluation-periods 2 --metric-name UsedMemoryPercent --namespace "System/Linux" --period 300 --statistic Average --threshold 60 --alarm-actions arn:aws:autoscaling:us-east-1:0123456789:scalingPolicy/bcd-2345-efg-678 --dimensions "AutoScalingGroupName=scaly-geek"

To clarify: the aggregation (--statistic) is computed over a single period, and its result must satisfy the comparison (--comparison-operator) against the threshold for the specified number of consecutive periods (--evaluation-periods) before the alarm fires.
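The evaluation rule can be modelled in a few lines of Python. This is a simplified sketch, not how Cloudwatch is implemented: `alarm_breaches` is hypothetical, it ignores alarm state transitions, and it simply returns False for a period with no datapoints, where Cloudwatch would report insufficient data. The sample values are made up and assume two instances publish to the same metric (for example, both using the AutoScalingGroupName dimension), so each period holds two datapoints.

```python
from statistics import mean

STATISTICS = {"SampleCount": len, "Average": mean, "Sum": sum,
              "Minimum": min, "Maximum": max}
COMPARISONS = {
    "GreaterThanOrEqualToThreshold": lambda v, t: v >= t,
    "GreaterThanThreshold": lambda v, t: v > t,
    "LessThanThreshold": lambda v, t: v < t,
    "LessThanOrEqualToThreshold": lambda v, t: v <= t,
}


def alarm_breaches(periods, statistic, comparison, threshold, evaluation_periods):
    """periods: one list of datapoints per period, oldest first.
    True when each of the last `evaluation_periods` periods breaches."""
    recent = periods[-evaluation_periods:]
    if len(recent) < evaluation_periods or not all(recent):
        # missing data: Cloudwatch would report INSUFFICIENT_DATA instead
        return False
    aggregate = STATISTICS[statistic]
    compare = COMPARISONS[comparison]
    return all(compare(aggregate(p), threshold) for p in recent)


# UsedMemoryPercent from two instances per 300-second period
periods = [[70, 80], [88, 90], [86, 92]]
print(alarm_breaches(periods, "Average", "GreaterThanThreshold", 85, 2))  # True
print(alarm_breaches(periods, "Average", "LessThanThreshold", 60, 2))     # False
```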

The following two AWS CLI commands are helpful when debugging autoscaling:

```
mon-describe-alarm-history [AlarmName] [--end-date value] [--history-item-type value]
    [--start-date value] [General Options]
```

```
as-describe-scaling-activities [ActivityIds [ActivityIds ...]] [--auto-scaling-group value]
    [--max-records value] [General Options]
```

Notes

- The use of as-create-or-update-trigger is deprecated and should be avoided.

- You can list your existing policies using as-describe-policies and delete policies with as-delete-policy

- Alarms will display as red lines on your Cloudwatch graphs.

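- The namespace, metric name, and dimensions in an alarm must exactly match those used when the data was published; for example, an alarm with the dimension AutoScalingGroupName=scaly-geek will not see data published only with an InstanceID dimension.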

References

Run the CLI commands with the --help parameter to see the details of the available options.

Thank you for your post.

I think the following code should be modified.

url_string += "&Signature=%s" % urlencoded_signature

=> url_string += "&Signature=%s" % urlencoded_signature

Absolutely, thanks for pointing that out; ampersands don’t always seem to make it into my posts intact. Hopefully that is the only error in the script (I should really put up a downloadable copy).

This doesn’t tie together.

When “Setting up a custom Cloudwatch metric” you need to push that data into the autoscaling group (rather than an instance’s metrics) so the Auto Scaling group alarm has data to work from. I am getting the “Insufficient Data” warning with this setup. Perhaps something has changed since this tutorial was written, but the logic wouldn’t have.

Here (http://blog.domenech.org/2012/11/aws-ec2-auto-scaling-external.html) we have:

--namespace "AS:$ASGROUPNAME"

I am working on using that, although the colon trips up the awscli (Python tools).

Thoughts?

It looks like this

--dimensions "AutoScalingGroupName=scaly-geek"

Needs to be in the mon-put-data command, like so:

mon-put-data --metric-name "UsedMemoryPercent" --namespace "System/Linux" --dimensions "AutoScalingGroupName=scaly-geek" --value "$memused" --unit "Percent"

(or the aws cloudwatch put-metric-data if one is using the new awscli tools)

What you have is good for capturing single-instance metrics, and I am using that (thanks!), but for autoscaling, the metrics from all the machines in the Auto Scaling group need to be put into the same dimension.

Thanks for the post though, it got me most of the way.

Thanks for the updates – it has been a while since I looked into autoscaling metrics. Good to know it at least got you on the right track.